AWS Lambda guide part II – Access to S3 service from Lambda function

In the previous chapter I talked a little about what AWS Lambda is and the idea behind serverless computing. I also presented a small Python application I wrote to sign certificate requests using my CA certificate (you can find how to create one in my post How to act as your own local CA and sign certificate request from ASA). After importing the sandboxed Python environment (required because of the non-standard library used for SSL; the whole procedure is described in my post How to create Python sandbox archive for AWS Lambda) and making a small change in the code, we managed to execute it in Lambda. I also mentioned that we can use other AWS services in our code, for example access to the S3 service from Lambda.

As you may remember, the initial version of my application has static paths to all files and assumes that it can open them from folders on the local hard drive. If you run the function in Lambda, you need a place where you can store files. That place is AWS S3. In this chapter I show you how to use the S3 service in a Lambda function. We will use the boto3 library, which you can install locally on your computer using pip.

Setting up S3 service

Let’s start by configuring AWS for our Lambda function. We will use:

- IAM for permissions management

- S3 for storage

- Lambda for function execution

In this chapter we will keep it really simple, so we won’t add any interaction with the user yet. All we want is to let our function access specific objects stored in an S3 bucket.

First, let’s configure the S3 storage service. I’m creating an S3 bucket called ‘certsigninglambdabucket’ with versioning enabled and leaving all other options at their defaults.

Sample S3 bucket that we will use for the Lambda function
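I created the bucket in the console. If you prefer to script this step, a minimal boto3 sketch could look like the following. The function names are hypothetical, and because bucket names are globally unique you will need your own name instead of certsigninglambdabucket.

```python
def create_bucket_kwargs(bucket: str, region: str) -> dict:
    """Build the keyword arguments for the S3 CreateBucket call.

    us-east-1 is the default location and is rejected as an explicit
    LocationConstraint; every other region has to be passed explicitly.
    """
    kwargs = {"Bucket": bucket}
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_versioned_bucket(bucket: str, region: str) -> None:
    """Create the bucket and enable versioning; all other options stay default."""
    import boto3  # imported here so create_bucket_kwargs() works without boto3

    s3 = boto3.client("s3", region_name=region)
    s3.create_bucket(**create_bucket_kwargs(bucket, region))
    s3.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
```

A call such as create_versioned_bucket("my-cert-bucket", "eu-west-1") would then create the bucket; the name and region here are only examples.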

Now I need to upload three files: my signing certificate, its key and the certificate request. These are exactly the same files that are statically specified in the code.

Files that we will use in our Lambda function

Properties of the files we will use in the Lambda function

I put these files in the Reduced Redundancy storage class and encrypted them with S3’s built-in server-side encryption, but this doesn’t really matter: you will get the same result with your preferred settings.
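The upload can also be done from Python. The sketch below assumes that S3’s built-in encryption corresponds to SSE-S3 (the "AES256" value) and uses a hypothetical request file name, request.csr; the other two names are the keys used in the IAM policy later in this chapter. Each file is stored under a key equal to its file name, so the keys match the names used in the code.

```python
import os

# signing-ca.crt and root-ca.key match the keys in the IAM policy used later;
# "request.csr" is a hypothetical certificate request file name.
FILES = ["signing-ca.crt", "root-ca.key", "request.csr"]


def upload_extra_args(reduced_redundancy: bool = True, encrypt: bool = True) -> dict:
    """ExtraArgs for upload_file(): storage class and SSE-S3 (AES256) encryption."""
    extra = {}
    if reduced_redundancy:
        extra["StorageClass"] = "REDUCED_REDUNDANCY"
    if encrypt:
        extra["ServerSideEncryption"] = "AES256"
    return extra


def upload_files(bucket: str, directory: str, names=FILES) -> None:
    """Upload each local file under a key equal to its file name."""
    import boto3  # imported here so upload_extra_args() works without boto3

    s3 = boto3.client("s3")
    extra = upload_extra_args()
    for name in names:
        s3.upload_file(os.path.join(directory, name), bucket, name, ExtraArgs=extra)
```

Passing reduced_redundancy=False keeps the default Standard storage class, which is what you get if you skip this setting in the console.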

Using AWS services in Python

AWS provides a dedicated Python library that we can use to access AWS services from our application, no matter whether we run it serverless or on our own computer. The library is called boto3. It’s packaged by many Linux distributions and can also be installed via pip.

I don’t like wasting my time writing functions, methods or libraries if they are already available on the Internet or in the original packages. When working with AWS products we need to handle exceptions. We can write our own exception parsers based on the structure of the response we receive, or we can use the exceptions module of another good Python library, botocore (botocore.exceptions).
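As a sketch of what botocore gives us: every ClientError carries the parsed service response in its response attribute, so the error code and message can be read without writing a parser. The helper names below are hypothetical. Note that with the restrictive policy used later in this chapter (no s3:ListBucket), S3 reports a missing key as AccessDenied rather than NoSuchKey.

```python
def error_details(response: dict) -> tuple:
    """Return (code, message) from the response dict attached to a ClientError."""
    error = response.get("Error", {})
    return error.get("Code", "Unknown"), error.get("Message", "")


def read_or_none(bucket: str, key: str):
    """Return the object's bytes, or None if S3 reports that the key is missing."""
    import boto3
    from botocore.exceptions import ClientError

    s3 = boto3.client("s3")
    try:
        return s3.get_object(Bucket=bucket, Key=key)["Body"].read()
    except ClientError as e:
        code, message = error_details(e.response)
        if code == "NoSuchKey":
            return None
        # str(e) reads e.g. "An error occurred (AccessDenied) when calling ..."
        print(f"S3 error {code}: {message}")
        raise
```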

Both boto3 and botocore are available in the Lambda Python runtime by default, so we can use them out of the box.

IAM Policy

Another aspect we need to think about is security. As an AWS user, you are responsible for the security of the files you store in S3, the execution of Lambda functions, access to other resources and so on, so this aspect cannot be ignored. In AWS, everything is denied by default unless an IAM policy allows it. In the previous chapter, when I created the Lambda function, I let AWS create a new role and named it myFirstLambdaFunctionRole. I also chose the Simple Microservice permissions template for this role. Let’s look in the IAM console at the exact permissions this template assigned.

IAM Policies generated from Lambda role template

These policies let the Lambda function access DynamoDB and write logs to CloudWatch; you can check this by clicking Show Policy. DynamoDB access comes from AWSLambdaMicroserviceExecutionRole, while CloudWatch access comes from AWSLambdaBasicExecutionRole, which is added to every role by default.
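The same check can be done from Python. This is a minimal sketch with hypothetical function names; it covers managed policies attached to the role (inline policies would be listed with list_role_policies instead) and uses a paginator so that long lists are not truncated.

```python
def attached_policy_names(pages) -> list:
    """Flatten ListAttachedRolePolicies result pages into a list of policy names."""
    names = []
    for page in pages:
        for policy in page.get("AttachedPolicies", []):
            names.append(policy["PolicyName"])
    return names


def show_role_policies(role_name: str) -> list:
    """Return the names of the managed policies attached to role_name."""
    import boto3  # imported here so attached_policy_names() works without boto3

    iam = boto3.client("iam")
    paginator = iam.get_paginator("list_attached_role_policies")
    return attached_policy_names(paginator.paginate(RoleName=role_name))
```

For the role from the previous chapter, you would call show_role_policies("myFirstLambdaFunctionRole").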

Let’s create a new role for the new version of our Lambda function. This role will give the function access to the S3 service and provide basic security. The permissions we need to assign are:

- Access to CloudWatch based on AWSLambdaBasicExecutionRole

- Limited access to S3 bucket objects

- Read-only access to the CA certificate and key files

- Read and Delete access to certificate request files

- Read, Write and Delete access to signed certificate files

First, I create the policy CertSigningLambdaS3Policy for the S3 bucket:

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "Stmt1497304470000",
            "Effect": "Allow",
            "Action": [
                "s3:GetObject"
            ],
            "Resource": [
                "arn:aws:s3:::certsigninglambdabucket/root-ca.key"
            ]
        },
        {
            "Sid": "Stmt1497305201000",
            "Effect": "Allow",
            "Action": [
                "s3:GetObject"
            ],
            "Resource": [
                "arn:aws:s3:::certsigninglambdabucket/signing-ca.crt"
            ]
        },
        {
            "Sid": "Stmt1497305262000",
            "Effect": "Allow",
            "Action": [
                "s3:DeleteObject",
                "s3:GetObject"
            ],
            "Resource": [
                "arn:aws:s3:::certsigninglambdabucket/*.csr"
            ]
        },
        {
            "Sid": "Stmt1497305306000",
            "Effect": "Allow",
            "Action": [
                "s3:DeleteObject",
                "s3:GetObject",
                "s3:PutObject"
            ],
            "Resource": [
                "arn:aws:s3:::certsigninglambdabucket/*.crt"
            ]
        }
    ]
}

Note that I used wildcard notation for the file names, so the files are expected to have strictly defined extensions. Next, I create a new role, CertSigningLambdaRole, and attach to it the AWSLambdaBasicExecutionRole policy and the newly created CertSigningLambdaS3Policy.
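To see which keys the wildcard resources actually cover, here is a rough local approximation of how the Allow statements above match a request. It is a sketch, not the IAM evaluation engine: the is_allowed() helper is hypothetical, Deny statements, conditions and bucket policies are ignored, and fnmatch additionally treats [...] as a character set, which IAM does not. The IAM policy simulator gives the authoritative answer.

```python
from fnmatch import fnmatchcase

BUCKET = "certsigninglambdabucket"

# The four statements of CertSigningLambdaS3Policy (Sid values omitted).
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject"],
         "Resource": [f"arn:aws:s3:::{BUCKET}/root-ca.key"]},
        {"Effect": "Allow", "Action": ["s3:GetObject"],
         "Resource": [f"arn:aws:s3:::{BUCKET}/signing-ca.crt"]},
        {"Effect": "Allow", "Action": ["s3:DeleteObject", "s3:GetObject"],
         "Resource": [f"arn:aws:s3:::{BUCKET}/*.csr"]},
        {"Effect": "Allow",
         "Action": ["s3:DeleteObject", "s3:GetObject", "s3:PutObject"],
         "Resource": [f"arn:aws:s3:::{BUCKET}/*.crt"]},
    ],
}


def is_allowed(policy: dict, action: str, bucket: str, key: str) -> bool:
    """Approximate check: does any Allow statement grant action on bucket/key?"""
    arn = f"arn:aws:s3:::{bucket}/{key}"
    for stmt in policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # A single action or resource may be written as a plain string.
        if isinstance(actions, str):
            actions = [actions]
        if isinstance(resources, str):
            resources = [resources]
        # Action names are case-insensitive in IAM; object keys are not.
        action_ok = any(fnmatchcase(action.lower(), a.lower()) for a in actions)
        if action_ok and any(fnmatchcase(arn, r) for r in resources):
            return True
    return False
```

One thing this check reveals: is_allowed(POLICY, "s3:PutObject", BUCKET, "signing-ca.crt") returns True, because the *.crt pattern also matches the CA certificate. Under this policy as written, signing-ca.crt is therefore not read-only; keeping the CA files under a separate prefix, or adding an explicit Deny for them, would close that gap.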

You can find the JSON for this policy on my GitHub.
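I set up the role in the IAM console, but the same steps can be scripted with boto3. In this sketch, create_signing_role() and lambda_trust_policy() are hypothetical helpers, and s3_policy is assumed to be the JSON policy above loaded into a Python dict. The trust policy is what allows the Lambda service to assume the role.

```python
import json

BASIC_EXECUTION_ARN = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"


def lambda_trust_policy() -> dict:
    """Trust policy that lets the Lambda service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def create_signing_role(s3_policy: dict,
                        role_name: str = "CertSigningLambdaRole",
                        policy_name: str = "CertSigningLambdaS3Policy") -> str:
    """Create the role and the S3 policy, attach both policies, return the role ARN."""
    import boto3  # imported here so lambda_trust_policy() works without boto3

    iam = boto3.client("iam")
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(lambda_trust_policy()),
    )
    policy = iam.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(s3_policy),
    )
    # AWS-managed policy for CloudWatch logging plus our own S3 policy.
    iam.attach_role_policy(RoleName=role_name, PolicyArn=BASIC_EXECUTION_ARN)
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy["Policy"]["Arn"])
    return role["Role"]["Arn"]
```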

Adding access to S3 service from Lambda function to the code

In this version of the application I modify the parts of the code responsible for reading and writing files. Remember, what we are adding is access to S3 from Lambda. First of all, we need to create a variable that represents our connection to the S3 service.

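import boto3
from botocore.exceptions import ClientError
from OpenSSL import crypto  # pyOpenSSL: load_certificate(), dump_certificate()

s3 = boto3.resource('s3')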

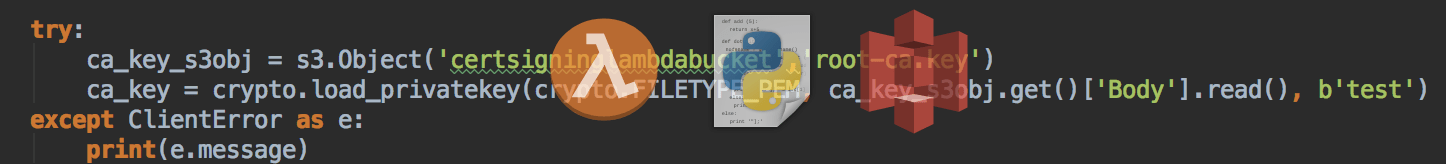

Whenever we operate on an object stored in an S3 bucket, we should handle exceptions in case something goes wrong. In my code, if reading or writing fails, I want the application to write full information about the problem to the logs (in Lambda, anything printed to standard output ends up in CloudWatch Logs). I use a try/except block for this.

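try:
    ca_cert_s3obj = s3.Object('certsigninglambdabucket', 'signing-ca.crt')
    ca_cert = crypto.load_certificate(crypto.FILETYPE_PEM, ca_cert_s3obj.get()['Body'].read())
except ClientError as e:
    # str(e) holds the full message; exceptions have no .message attribute in Python 3
    print(e)
    # re-raise so the invocation fails instead of continuing without ca_cert
    raise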

To access an S3 object, we call the Object() method of the s3 variable. As arguments we provide the bucket name and the key (the name of the file stored in the bucket). This returns an Object resource, a lightweight handle: no request is sent to S3 yet, so errors such as a missing file or denied access surface only when we actually call get() or put(). To read the content of the stored file, we call get(), which performs the GET request, take ‘Body’ from the response (a stream) and call read() on it. This is how the previous and current code look side by side.

Accessing a file in S3: source code comparison

We perform the write operation on the new certificate file in the same way; we just need to remember to pass the content as the Body of the S3 object:

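try:
    s3.Object('certsigninglambdabucket', 'asav-3.virl.lan.crt').put(
        Body=crypto.dump_certificate(crypto.FILETYPE_PEM, new_crt))
except ClientError as e:
    print(e)  # full botocore error message goes to the logs
    raise     # report the failed write instead of a silent success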
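Because Object() only builds a handle and the request is sent by get() or put(), the read and write steps can be wrapped in two small helpers. The names read_object() and write_object() are hypothetical; taking the s3 resource as a parameter, instead of creating it inside, makes it easy to pass a stand-in object when testing locally. Any ClientError propagates to the caller, which can handle it with a try/except block as in the snippets above.

```python
def read_object(s3, bucket: str, key: str) -> bytes:
    """Return the full content of bucket/key via the boto3 S3 resource API."""
    # get() performs the GET request; 'Body' is a stream that read() drains.
    return s3.Object(bucket, key).get()["Body"].read()


def write_object(s3, bucket: str, key: str, data: bytes) -> None:
    """Store data as bucket/key; with versioning on, S3 keeps older versions."""
    s3.Object(bucket, key).put(Body=data)
```

In the Lambda code this would read, for example, ca_cert = crypto.load_certificate(crypto.FILETYPE_PEM, read_object(s3, 'certsigninglambdabucket', 'signing-ca.crt')).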

So accessing the S3 service from Lambda comes down to calling a boto3 function instead of opening a local file. The logic barely changes, because our code treats S3 objects the same way as files. We just need to remember to handle the exceptions.
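Since the code treats S3 objects like files, one way to keep a single code path for local runs and Lambda runs is to accept either a local path or an s3://bucket/key location. This is a sketch under assumptions: the s3:// notation is borrowed from the AWS CLI convention, and parse_s3_uri() and load_bytes() are hypothetical helpers, not part of the original application, which hardcodes the bucket and keys.

```python
from urllib.parse import urlparse


def parse_s3_uri(location: str):
    """Split 's3://bucket/key' into (bucket, key); return None for local paths.

    Keys containing '?' or '#' would need different parsing, since urlparse
    treats them as query and fragment separators.
    """
    parsed = urlparse(location)
    if parsed.scheme != "s3":
        return None
    return parsed.netloc, parsed.path[1:]  # drop the leading '/'


def load_bytes(location: str) -> bytes:
    """Read a file from the local disk or from S3, depending on the location."""
    target = parse_s3_uri(location)
    if target is None:
        with open(location, "rb") as fh:
            return fh.read()
    import boto3  # only needed for the S3 branch

    bucket, key = target
    return boto3.resource("s3").Object(bucket, key).get()["Body"].read()
```

Locally you could pass a path such as ./signing-ca.crt, and in Lambda s3://certsigninglambdabucket/signing-ca.crt, without touching the signing logic.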

Execute new code

Finally, we can create a new Lambda function or update the code of an existing one. Note that, compared to the code from the previous chapter, I’ve renamed the file to CertSigningS3.py, and the function we need to call is now main(). So we need to set the Handler to CertSigningS3.main to make this work. We also need to assign the newly created CertSigningLambdaRole IAM role to this Lambda function. If we forget that, or forget to grant all required permissions, we can expect an error message. Thanks to the botocore library, it comes in a nice human-readable format:

An error occurred (AccessDenied) when calling the PutObject operation: Access Denied
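The handler and role settings described above can also be applied with boto3 instead of the console. This sketch uses hypothetical helper names and assumes the function already exists; keep in mind that Lambda calls the Python handler with two arguments, event and context, so main() has to accept both.

```python
def handler_string(module_file: str, function: str) -> str:
    """Lambda handler setting: '<file name without .py>.<function name>'."""
    module = module_file[:-3] if module_file.endswith(".py") else module_file
    return f"{module}.{function}"


def configure_function(function_name: str, role_arn: str) -> None:
    """Point an existing Lambda function at CertSigningS3.main and the new role."""
    import boto3  # imported here so handler_string() works without boto3

    boto3.client("lambda").update_function_configuration(
        FunctionName=function_name,
        Handler=handler_string("CertSigningS3.py", "main"),
        Role=role_arn,
    )
```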

When the function executes successfully, we find a new file with the signed certificate in our S3 bucket. Its name is the one statically specified in our code: asav-3.virl.lan.crt. It seems we’re done: we have added access to the S3 service from Lambda, and we can read files from there and write new ones.

The full code of the application from this chapter is available on my GitHub.

Summary

In this chapter I showed you how to access the S3 service from Lambda. We extended the code with a connection to the S3 bucket where we store our files, and then we read from and wrote to this bucket. Using the boto3 library is not that hard, and all its functions are well documented. Finally, we executed our function, and as a result a new file appeared in the bucket. In addition, our code now handles exceptions.

When accessing other AWS services, remember the following:

- Create an IAM policy that allows Lambda to perform operations on the other services. For security reasons, this policy must be as restrictive as possible. Remember to attach it to the function’s IAM role

- Use the boto3 library to access other services

- Handle exceptions in your code; the botocore library provides a nice framework for this